Technical Overview

Technical teams must understand three (3) core areas of MedStack Control before deploying and maintaining workloads on MedStack Control clusters.

Networking

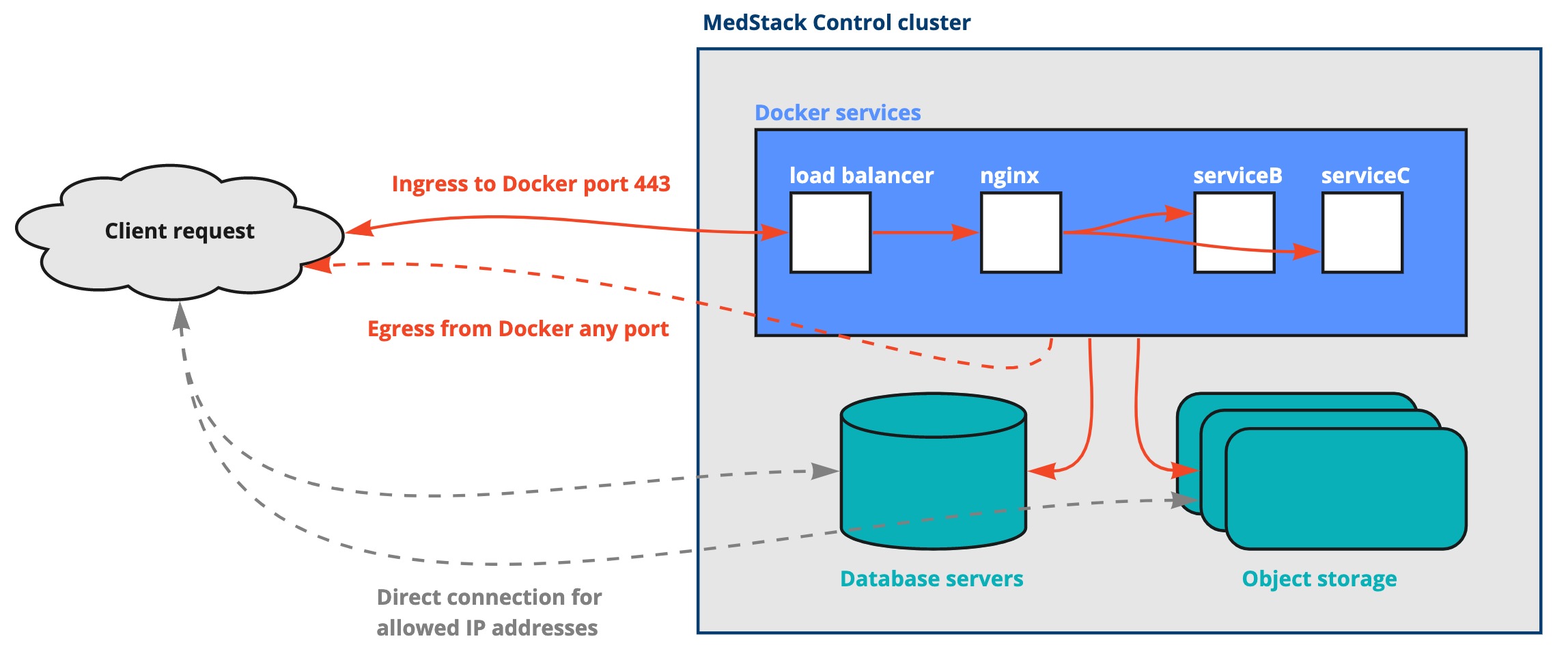

Traffic can only ingress to Docker services on MedStack Control via port 443. This restriction is enforced by the firewall and load balancer rules and cannot be changed. Traffic can, however, egress from the Docker network over any port.
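The ingress rule can be expressed as a small local sanity check to run before deploying. This is a minimal sketch and not a MedStack Control feature: the function name `unreachable_ingress_ports` and the idea of listing the ports your service expects to receive public traffic on are assumptions for illustration.

```python
# Hypothetical pre-deployment check; not part of MedStack Control.
# Models the documented rule: inbound traffic reaches Docker services
# only on port 443, while outbound traffic may use any port.

ALLOWED_INGRESS_PORTS = frozenset({443})


def unreachable_ingress_ports(expected_ports: list[int]) -> list[int]:
    """Return the ports (sorted, deduplicated) that public clients could not reach."""
    return sorted({p for p in expected_ports if p not in ALLOWED_INGRESS_PORTS})


if __name__ == "__main__":
    # A service that expects HTTPS plus a plain-HTTP port and a custom admin port.
    print(unreachable_ingress_ports([443, 80, 8080]))  # [80, 8080]
```

Any port the check reports would need to be served behind 443 instead, since the restriction cannot be changed.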

To learn more about clusters and their networking rules, please refer to the clusters build page.

Deploying to a cluster

Deploying to a MedStack Control cluster requires three (3) simple actions:

- Establish a deployment pipeline or strategy

- Configure a service and dependencies

- Pull from the container image registry

📘 Add registry credentials for private registries

Most teams store their container images in a private registry. Each user must add a set of registry credentials to their MedStack Control account; MedStack Control uses these credentials to authenticate with the registry when fetching images.
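MedStack Control stores these credentials for you, so nothing below is required on your side. For background only, this sketch shows how the Docker CLI itself commonly records registry credentials in `~/.docker/config.json`: an `auths` map keyed by registry host, whose `auth` value is the base64 encoding of `username:password`. The registry host and credentials are placeholders.

```python
import base64
import json


def docker_auth_entry(registry: str, username: str, password: str) -> dict:
    """Build an `auths` entry in the shape used by the Docker CLI's config.json."""
    # Docker encodes "username:password" as base64 for HTTP basic auth.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"auths": {registry: {"auth": token}}}


if __name__ == "__main__":
    # Placeholder registry and credentials for illustration only.
    entry = docker_auth_entry("registry.example.com", "deploy-bot", "s3cret")
    print(json.dumps(entry, indent=2))
```

Base64 is an encoding, not encryption, which is one reason to use a dedicated, least-privilege registry account (for example, pull-only) for the credentials you add.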

📘 How To Configure CI/CD on MedStack

Check out our E-Book on How To Configure CI/CD on MedStack for a comprehensive guide with examples on building a pipeline that deploys to MedStack Control clusters.

The deployment pipeline or strategy

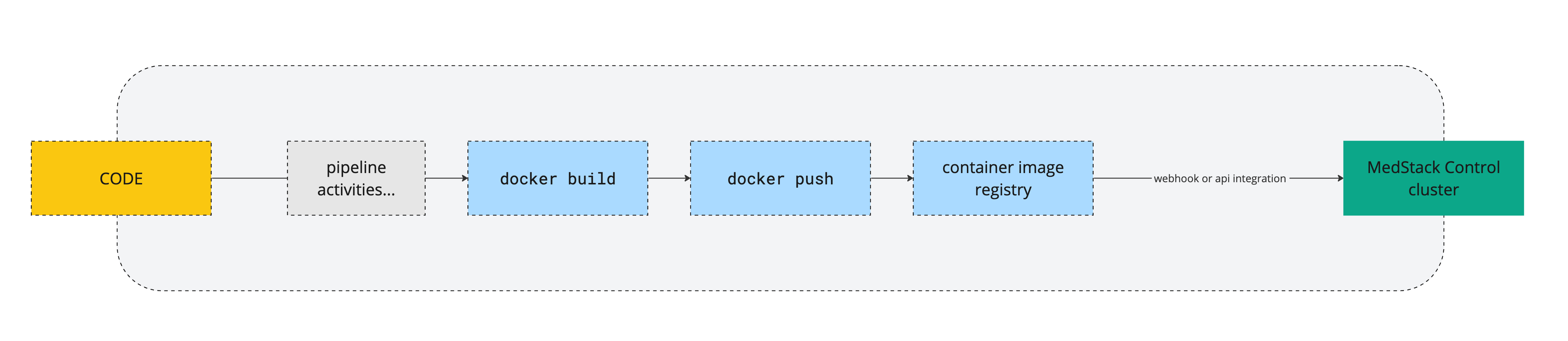

Technical teams have the freedom to own their deployment pipelines, strategies and the systems that manage their code and container images. All deployment pipelines and strategies contain these four (4) core steps:

- Code repo branch marked for deployment

- Pipeline activities (unique to each team and application workflows)

  - `docker build` – the step where the code is built into a Docker container image
  - `docker push` – the step where the container image is sent to a registry

- Container image registry receives new container image

- MedStack Control pulls the container image by downloading it to the cluster and instantiates a service
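The two pipeline activities can be sketched as a small script. This is a minimal sketch under stated assumptions: the registry host, image name and tag are placeholders, `pipeline_commands` and `run_pipeline` are hypothetical helpers, and the final step (MedStack Control pulling the image) is triggered separately using one of the methods described under Pull from the container image registry.

```python
import shlex
import subprocess


def pipeline_commands(registry: str, image: str, tag: str, context: str = ".") -> list[list[str]]:
    """Return the docker build and docker push commands for one image tag."""
    ref = f"{registry}/{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, context],  # build the code into an image
        ["docker", "push", ref],                  # send the image to the registry
    ]


def run_pipeline(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them, stopping at the first failure."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure


if __name__ == "__main__":
    # Placeholder registry, image name, and tag.
    run_pipeline(pipeline_commands("registry.example.com", "my-app", "1.4.2"))
```

Dry run is the default so the script can be inspected safely; a CI system would call `run_pipeline(..., dry_run=False)` on the branch marked for deployment.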

Configure a service and dependencies

Services and their dependencies can be updated as part of the deployment pipeline. Some dependencies are configured in MedStack Control during deployment, while others depend on the application layer.

MedStack Control dependencies

MedStack Control requires information about the service (in particular the registry's web address, the image name, and the tag or digest), as well as any secrets, configs and volumes the service is configured to use.
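The information listed above can be collected in a simple record before you enter it in MedStack Control. This is a minimal sketch: the `ServiceSpec` class and its field names are hypothetical and are not MedStack Control's schema. It does follow Docker's standard image reference syntax, `registry/name:tag` or `registry/name@digest`.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ServiceSpec:
    """Hypothetical summary of what a service needs; not MedStack Control's schema."""
    registry: str                   # registry web address, e.g. "registry.example.com"
    image: str                      # image name, e.g. "my-app"
    tag: Optional[str] = None       # mutable tag, e.g. "1.4.2"
    digest: Optional[str] = None    # immutable digest, e.g. "sha256:<hex>"
    secrets: list[str] = field(default_factory=list)
    configs: list[str] = field(default_factory=list)
    volumes: list[str] = field(default_factory=list)

    def image_reference(self) -> str:
        """Compose the full image reference Docker uses to pull the image."""
        base = f"{self.registry}/{self.image}"
        if self.digest:
            # A digest pins the exact image content, so prefer it when present.
            return f"{base}@{self.digest}"
        if self.tag:
            return f"{base}:{self.tag}"
        # Docker would silently fall back to "latest"; require an explicit choice.
        raise ValueError("either a tag or a digest is required")
```

Pinning by digest guarantees the cluster pulls exactly the image your pipeline pushed, whereas a tag can be moved to a different image later.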

Application layer dependencies

While the number of application layer dependencies can be vast, some common dependencies include:

- Database migrations

- Runtime scripts

Handling these dependencies may require some interaction with the Docker variables accessible in MedStack Control, but they are most often handled in the application layer or deployment pipeline, independent of the target environment.
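A common application-layer pattern is a startup script that runs database migrations and other runtime scripts before starting the application, and refuses to start if any step fails. This is a minimal sketch: `run_startup` is a hypothetical helper, and the placeholder commands stand in for your own migration tool and server command.

```python
import subprocess
import sys


def run_startup(steps: list[list[str]]) -> int:
    """Run each startup step in order; return the first non-zero exit code, else 0."""
    for step in steps:
        result = subprocess.run(step)
        if result.returncode != 0:
            # Don't start the app if a migration or runtime script failed.
            return result.returncode
    return 0


if __name__ == "__main__":
    # Placeholder commands: replace with your migration tool and server command.
    steps = [
        [sys.executable, "-c", "print('running migrations')"],
        [sys.executable, "-c", "print('starting app')"],
    ]
    print("exit code:", run_startup(steps))
```

In a real container entrypoint, the final step would usually replace the script process (for example with `os.execvp`) so the server receives stop signals from Docker directly.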

Pull from the container image registry

There are two (2) main methods for pulling container images from the registry:

- Trigger a service webhook

- `POST`, `PUT` or `PATCH` API request to the `/service` endpoint

Pulling a container image from the registry into the MedStack Control cluster can be triggered by the update methods above. Once the image is downloaded to the cluster, the service will attempt to start by spinning up a service instance as a container.
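Both update methods can be scripted from a deployment pipeline. This is a minimal sketch using only the Python standard library, and nearly every specific in it is an assumption: the base URL, the webhook URL, the bearer-token `Authorization` header and the JSON payload shape are hypothetical placeholders, so consult MedStack Control's own documentation for the real values. The helpers only build the requests; sending them is a separate `urllib.request.urlopen(req)` call.

```python
import json
import urllib.request

# Hypothetical values for illustration only; not MedStack Control's real API.
BASE_URL = "https://api.example.com"


def webhook_request(webhook_url: str) -> urllib.request.Request:
    """Build a POST request that triggers a service webhook (no body assumed)."""
    return urllib.request.Request(webhook_url, method="POST")


def service_update_request(method: str, payload: dict, token: str,
                           base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST, PUT or PATCH request to the /service endpoint."""
    if method not in {"POST", "PUT", "PATCH"}:
        raise ValueError(f"unsupported method: {method}")
    return urllib.request.Request(
        f"{base_url}/service",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # auth scheme is an assumption
        },
        method=method,
    )


if __name__ == "__main__":
    # Hypothetical payload pointing the service at a new image tag.
    req = service_update_request(
        "PATCH", {"image": "registry.example.com/my-app:1.4.2"}, token="YOUR_TOKEN")
    print(req.get_method(), req.full_url)
```

Keeping request construction separate from sending makes it easy to log or test exactly what the pipeline will submit.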

Docker on MedStack Control

We chose to build Docker into MedStack Control for the reasons outlined under Why Docker? below, and for the granular control Docker offers, which allows us to provide privacy compliance guarantees when running your applications on MedStack Control.

Benefits and limitations

There are some important concepts to understand regarding bringing your applications into MedStack Control and the boundary conditions for working with Docker on our platform.

- You cannot execute Docker commands – this is by design, to guarantee privacy compliance under the policies you inherit by running your applications on the MedStack platform.

- Instead, you can shell into containers – once you've deployed a service in your Docker environment, you can SSH into its containers via the web terminal and interact with them and with connected services and resources.

- Your Docker environment is automatically backed up and restorable – built for disaster recovery and ransomware attack mitigation, our backup system captures snapshots of the entire Docker environment every hour, so your configuration can be restored to a known-good state and your data remains available.

- Your Docker environment runs in a protected virtual network – you can make your application on MedStack Control accessible to the open internet knowing it is protected by enforced encryption and by DDoS and IP spoofing mitigation, and you can also make it accessible to select resources that only you and your team can access.

- Your Docker nodes and the Docker network and orchestration are managed by MedStack – we take care of securing, patching and updating the host machines that power your Docker environment, so you only need to focus on the application layer.

- You manage the Docker configuration and services in MedStack Control – as you develop locally, keep track of your Dockerfile or Docker Compose configuration, since you'll need to translate it into the Docker environment on MedStack Control.
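When translating a Docker Compose file, it helps to list, per service, everything that must be recreated in MedStack Control. This is a minimal sketch: `migration_checklist` and its output shape are hypothetical, and it takes the Compose file as an already-parsed dictionary (for example, the result of PyYAML's `yaml.safe_load`). It handles both Compose short syntax (`"name"`, `"src:dst"`, `"KEY=value"`) and long syntax (mappings with a `source` key).

```python
def _names(entries) -> list[str]:
    """Normalize Compose short syntax ("name" or "src:dst") and long syntax ({"source": ...})."""
    names = []
    for entry in entries or []:
        if isinstance(entry, dict):
            names.append(str(entry.get("source", "")))
        else:
            names.append(str(entry).split(":", 1)[0])
    return names


def env_keys(environment) -> list[str]:
    """Return variable names from a Compose environment list or mapping."""
    if isinstance(environment, dict):
        return sorted(environment)
    return sorted(str(item).split("=", 1)[0] for item in environment or [])


def migration_checklist(compose: dict) -> dict[str, dict]:
    """Summarize what each Compose service needs recreated in MedStack Control."""
    checklist = {}
    for name, svc in compose.get("services", {}).items():
        checklist[name] = {
            # None means the service only has `build:`; build and push it first.
            "image": svc.get("image"),
            "environment": env_keys(svc.get("environment")),
            "secrets": _names(svc.get("secrets")),
            "configs": _names(svc.get("configs")),
            "volumes": _names(svc.get("volumes")),
        }
    return checklist
```

Only variable names are collected from `environment`, so sensitive values can be entered as secrets in MedStack Control rather than copied from a local file.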

Are you new to Docker?

If you already work with Docker, you're likely aware of the many benefits of running on its platform.

If you're new to Docker, you'll find Docker's getting started resources to be an excellent place to become familiar with the platform. We strongly recommend the following resources:

- ✅ Get Started with Docker

- Download and install Docker Desktop

- Set up a Docker Hub account

- Learn the ropes

- ✅ Orientation and setup

- ✅ Docker application tutorial

Why Docker?

With a community of millions of developers, Docker is one of the easiest platforms to use for building modern applications. Docker is a platform for developers and sysadmins to build, run, and share applications with containers. The use of containers to deploy applications is called containerization. Containers are not new, but their use for easily deploying applications is.

Containerization is increasingly popular because containers are:

- Flexible: Even the most complex applications can be containerized.

- Lightweight: Containers leverage and share the host kernel, making them much more efficient in terms of system resources than virtual machines.

- Portable: You can build locally, deploy to the cloud, and run anywhere.

- Loosely coupled: Containers are highly self-sufficient and encapsulated, allowing you to replace or upgrade one without disrupting others.

- Scalable: You can increase and automatically distribute container replicas across a data center.

- Secure: Containers apply aggressive constraints and isolations to processes without any configuration required on the part of the user.

Additional resources

The following Docker resources are available to you to learn more about how Docker works as an enterprise container platform.

- Interactive – Docker IT Administrator Questions

- Whitepaper – Definitive Guide to Enterprise Container Platforms